Since the rise of generative AI, large language models (LLMs) have become a key driver of digital transformation for enterprises. From automating processes and enhancing customer experience to accelerating innovation, their potential seems limitless.

But this promise comes with a paradox: the more powerful the models, the greater the challenges in terms of data sovereignty, regulatory compliance, and costs. This is where hybrid LLMs come in.

Hybrid LLMs are a new approach that combines the strength of large models with the agility of smaller ones, while keeping sensitive data under the organization's control.

How do Hybrid LLMs differ from a standard LLM?

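A standard LLM is a single model. A Hybrid LLM combines multiple models (large and small), orchestrated by an intelligent router. Sending each request to the smallest model that can handle it makes the system faster and cheaper, and keeping sensitive requests on locally hosted models makes it more secure.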

What is the role of the “router” in a Hybrid LLM system?

The router analyzes each request and directs it to the most appropriate model. Simple queries go to a lightweight SLM, while complex ones are routed to a large-scale LLM.

How do Hybrid LLMs relate to RAG (Retrieval-Augmented Generation)?

RAG enriches a Hybrid LLM with reliable, enterprise-specific data, enabling it to generate more precise and contextualized responses — an essential feature for regulated industries.

Which industries benefit the most from Hybrid LLMs?

Regulated industries such as banking, insurance, healthcare, and government. More broadly, any company handling strategic or sensitive data can benefit from this balanced approach.

What Exactly Is a Hybrid LLM?

A hybrid large language model (Hybrid LLM) is not a single model but a system that combines large language models (LLMs) for complex tasks with small language models (SLMs) for lightweight queries.

These models are orchestrated so that each one is used where it performs best:

- Large Language Models (LLMs): built for complex tasks that require deep language understanding and advanced generation capabilities.

- Small Language Models (SLMs): faster, cheaper, and ideal for simple, lightweight requests.

At the core lies an intelligent routing mechanism (LLM routing). Based on the sensitivity and complexity of each request, the router decides which model to activate.

This logic is similar to Mixture of Experts (MoE) architectures, where different “experts” are called upon depending on the need. In this way, a Hybrid LLM becomes an adaptive ecosystem, optimizing performance, speed, and cost while ensuring compliance.

How Hybrid LLMs Work

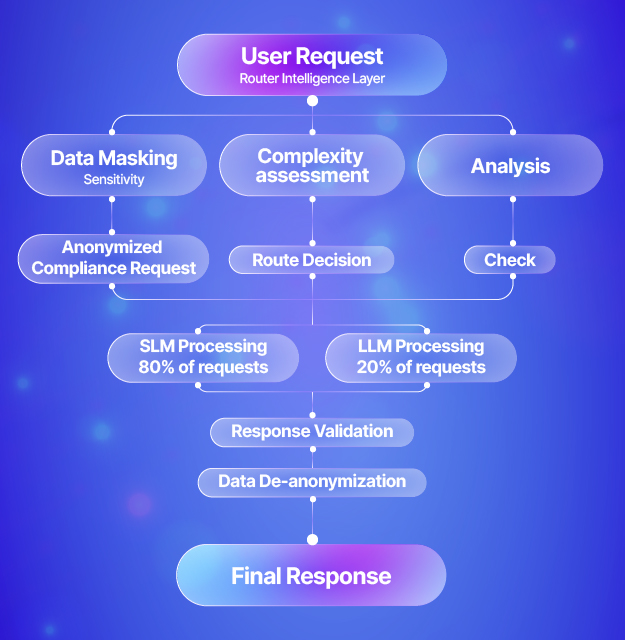

When a user submits a request to a Hybrid LLM system, it goes through a sophisticated four-stage process:

Request Analysis & Classification

The system’s intelligent router analyzes incoming requests using multiple criteria:

- Complexity assessment: Query length, linguistic complexity, and required reasoning depth

- Data sensitivity detection: Automatic identification of PII, confidential business data, or regulatory-sensitive information

- Task categorization: Classification into predefined categories (FAQ, document analysis, creative writing, technical support, etc.)

- Performance requirements: Real-time vs. batch processing needs
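
The four analysis criteria above can be approximated with a crude rule-based profiler. This is a minimal sketch under stated assumptions, not the system's actual classifier: the regular expressions, keyword lists, weights, and the `RequestProfile` and `classify` names are all hypothetical, and a production router would more likely use trained classifiers and a dedicated PII-detection service.

```python
import re
from dataclasses import dataclass

# Hypothetical patterns: real PII detection needs far broader coverage.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "iban": re.compile(r"\b[A-Z]{2}\d{2}[A-Z0-9]{11,30}\b"),
    "phone": re.compile(r"\+?\d[\d \-]{7,}\d"),
}

# Hypothetical keyword-based task categories, checked in this order.
TASK_KEYWORDS = {
    "faq": ("opening hours", "how do i", "what is", "where can"),
    "document_analysis": ("contract", "report", "analyze", "analyse"),
    "creative_writing": ("write a", "draft", "story", "slogan"),
    "technical_support": ("error", "crash", "install", "bug"),
}

# Hypothetical markers of deeper reasoning.
REASONING_MARKERS = ("why", "compare", "explain", "step by step", "trade-off")


@dataclass
class RequestProfile:
    complexity: float      # 0.0 (trivial) to 1.0 (very complex)
    pii_types: list[str]   # names of the PII patterns that matched
    category: str          # a key of TASK_KEYWORDS, or "other"
    realtime: bool         # interactive request vs. batch job


def classify(text: str, realtime: bool = True) -> RequestProfile:
    lowered = text.lower()
    # Complexity: weighted blend of length and reasoning markers, capped at 1.0.
    length_score = min(len(text.split()) / 200, 1.0)
    reasoning_hits = sum(marker in lowered for marker in REASONING_MARKERS)
    complexity = min(0.6 * length_score + 0.2 * reasoning_hits, 1.0)

    # Sensitivity: which PII patterns appear in the original text.
    pii_types = [name for name, pattern in PII_PATTERNS.items() if pattern.search(text)]

    # Task category: first category whose keywords appear, else "other".
    category = next(
        (cat for cat, words in TASK_KEYWORDS.items() if any(w in lowered for w in words)),
        "other",
    )
    return RequestProfile(complexity, pii_types, category, realtime)
```

For example, `classify("What is your opening hours policy?")` yields a low complexity score, no PII, and the `faq` category, while a question containing an email address and words such as "compare" or "explain" scores higher and is flagged as sensitive.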

Anonymization & Data Protection

For sensitive requests, the system applies multi-layer protection:

- Dynamic masking: Personal identifiers, financial data, and proprietary information are temporarily replaced with anonymized tokens

- Context preservation: The semantic meaning is maintained while removing identifying elements

- Audit trail creation: All data transformations are logged for compliance verification
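
Dynamic masking with an audit trail could look roughly like the sketch below. Each detected value is replaced by a typed placeholder such as `<EMAIL_1>`, which preserves the context (the model still knows an email address was there), the original values are kept in a local mapping so they can be restored later, and each transformation is logged without logging the sensitive value itself. The patterns, the token format, and the `mask` function are illustrative assumptions; because the mapping makes the replacement reversible, this is strictly speaking pseudonymization rather than irreversible anonymization.

```python
import re
from datetime import datetime, timezone

# Hypothetical detection patterns, applied in this order.
PATTERNS = [
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")),
    ("IBAN", re.compile(r"\b[A-Z]{2}\d{2}[A-Z0-9]{11,30}\b")),
]


def mask(text: str, audit_log: list[dict]) -> tuple[str, dict[str, str]]:
    """Replace sensitive values with typed placeholder tokens.

    Returns the masked text and a token -> original-value mapping that
    must stay inside the enterprise environment.
    """
    mapping: dict[str, str] = {}
    for label, pattern in PATTERNS:
        counter = 0

        def _replace(match: re.Match) -> str:
            nonlocal counter
            counter += 1
            token = f"<{label}_{counter}>"
            mapping[token] = match.group(0)
            # The audit entry records what kind of data was masked, not the value.
            audit_log.append({
                "time": datetime.now(timezone.utc).isoformat(),
                "action": "mask",
                "type": label,
                "token": token,
            })
            return token

        text = pattern.sub(_replace, text)
    return text, mapping
```

Only the masked text is sent onward; the mapping and the audit log stay local.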

Intelligent Model Selection

Based on the analysis, the router makes optimal routing decisions:

Route to Small Language Models (SLMs) when:

- Simple FAQ or informational queries

- Routine customer service interactions

- Basic document summarization

- Standard form processing

Route to Large Language Models (LLMs) when:

- Complex reasoning or analysis required

- Creative content generation

- Multi-step problem solving

- Technical documentation creation
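
The selection rules above can be expressed as a small routing function. In this sketch, which is an assumption rather than a reference implementation, simple requests stay on a local SLM, sensitive requests that cannot be safely masked stay on a locally hosted LLM, and everything else goes to an external LLM (receiving masked text). The target names, category sets, and the 0.5 complexity threshold are hypothetical placeholders.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    LOCAL_SLM = "local-slm"        # hypothetical on-premise small model
    LOCAL_LLM = "local-llm"        # hypothetical on-premise large model
    EXTERNAL_LLM = "external-llm"  # hypothetical hosted large model


SLM_CATEGORIES = {"faq", "customer_service", "summarization", "form_processing"}
LLM_CATEGORIES = {"analysis", "creative_writing", "multi_step", "technical_docs"}


@dataclass
class Request:
    category: str
    complexity: float  # 0.0 to 1.0, produced by the analysis stage
    sensitive: bool    # PII or confidential data detected
    maskable: bool     # can the sensitive parts be masked safely?


def route(req: Request, threshold: float = 0.5) -> Target:
    needs_llm = req.category in LLM_CATEGORIES or req.complexity >= threshold
    if not needs_llm and req.category in SLM_CATEGORIES:
        # Simple, known request types stay on the fast, cheap local SLM.
        return Target.LOCAL_SLM
    if req.sensitive and not req.maskable:
        # Data that cannot be masked never leaves the enterprise environment.
        return Target.LOCAL_LLM
    # Unknown categories fall through to an LLM as a conservative default.
    return Target.EXTERNAL_LLM
```

Checking categories before complexity keeps the rules readable; a high complexity score still overrides an FAQ label, so a long, intricate "FAQ" is not forced onto the small model.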

Response Processing & De-anonymization

Once the appropriate model generates a response:

- Quality validation: Response accuracy and completeness checks

- Data restoration: Anonymized elements are restored to their original form

- Final security scan: Checks that no sensitive data leaked during processing
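
Restoration and the final scan can be sketched as the inverse of masking. The scan runs on the model's raw output and flags any identifier that appears in clear text, since the model only ever saw placeholders; restoration then swaps placeholders back using the mapping that never left the enterprise, rejecting tokens the masker never issued. The `<TYPE_n>` token format, the email-only scan pattern, and the function names are assumptions for illustration.

```python
import re

TOKEN = re.compile(r"<[A-Z]+_\d+>")
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")  # hypothetical scan pattern


def final_scan(raw_answer: str) -> list[str]:
    """Return raw email addresses found in the model output before restoration.

    Any hit means an identifier appeared that was never masked, so the
    response should be blocked or sent for review.
    """
    return EMAIL.findall(raw_answer)


def restore(raw_answer: str, mapping: dict[str, str]) -> str:
    """Swap placeholder tokens back to their original values."""
    unknown = [t for t in TOKEN.findall(raw_answer) if t not in mapping]
    if unknown:
        # A token the masker never issued suggests a corrupted or invented answer.
        raise ValueError(f"unknown placeholders: {unknown}")
    return TOKEN.sub(lambda m: mapping[m.group(0)], raw_answer)
```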

Benefits of Hybrid LLMs for Enterprises

Hybrid LLMs offer enterprises the ability to combine the power of large language models with domain-specific expertise, enhancing decision-making and operational efficiency. They enable scalable, real-time insights, personalized customer experiences, and seamless integration across diverse business functions.

Public LLMs: Fast but Risky

Public large language models are accessible, fast, and powerful. Yet, sending sensitive business or customer data outside the organization introduces major risks:

- Data sovereignty is lost.

- Compliance with regulations and standards such as GDPR, HIPAA, and ISO 27001 becomes harder to evidence.

- Auditability cannot be guaranteed once data leaves your control, leaving companies legally exposed.

Private LLMs: Safe but Costly

Private deployments protect data and sovereignty, but they come at a steep price:

- Millions in infrastructure and hardware.

- A team of experts to maintain and update models.

- Scalability challenges as user bases grow.

For many enterprises, neither choice is acceptable.

Why HybridLLM Changes the Game

Hybrid LLMs revolutionize business operations by blending advanced AI with industry-specific knowledge, providing more accurate and contextually relevant solutions. They enable enterprises to leverage both the power of general AI and the precision of tailored models, driving innovation and competitive advantage.

Performance Optimization

By using SLMs for routine queries and reserving LLMs for complex tasks, hybrid systems deliver low latency and built-in scalability. The result is consistent performance, even at enterprise scale.

Typical performance metrics:

- SLM response time: 200-500ms for simple queries

- LLM response time: 1-3 seconds for complex tasks

- Hybrid system: well-tuned hybrid systems often deliver sub-second responses (a time to first token, or TTFT, in the hundreds of milliseconds has been reported for 7B-class models).
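
As a worked example of why mixing models keeps latency low, take the midpoints of the ranges above (350 ms for an SLM, 2 s for an LLM) and assume, purely for illustration, that a share $p = 0.8$ of requests can be served by the SLM. The mean response time is then the traffic-weighted average:

```latex
\bar{t} = p\,t_{\text{SLM}} + (1-p)\,t_{\text{LLM}}
        = 0.8 \times 0.35\,\text{s} + 0.2 \times 2\,\text{s}
        = 0.28\,\text{s} + 0.40\,\text{s}
        = 0.68\,\text{s}
```

With these numbers the mean stays under one second as long as $p > (t_{\text{LLM}} - 1\,\text{s}) / (t_{\text{LLM}} - t_{\text{SLM}}) = 1/1.65 \approx 0.61$. This is a mean over complete responses that ignores router overhead, so it measures something different from TTFT.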

Data Sovereignty and Privacy

With GDPR, the Swiss DPA, and the European AI Act (entered into force 1 Aug 2024) in play, enterprises must ensure their sensitive data remains protected. Hybrid architectures allow critical data to be processed locally, while only non-sensitive information is routed externally.

Flexibility and Customization

By design, Hybrid LLMs are agile: they can integrate both open-source and proprietary models. This gives organizations a truly tailored AI system, aligned with their processes and scalable over time.
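
One common way to stay open to both open-source and proprietary models is to hide every backend behind the same small interface, so the router depends only on that interface. The sketch below uses Python's `typing.Protocol`; the `ModelBackend` name, the two stand-in backends, and the registry are hypothetical, and a real backend would wrap an inference server or a vendor SDK instead of returning canned text.

```python
from typing import Protocol


class ModelBackend(Protocol):
    name: str

    def generate(self, prompt: str, max_tokens: int = 256) -> str: ...


class LocalOpenSourceModel:
    """Stand-in for a self-hosted open-weight model (hypothetical)."""

    name = "local-slm"

    def generate(self, prompt: str, max_tokens: int = 256) -> str:
        return f"[{self.name}] short answer to: {prompt[:40]}"


class HostedProprietaryModel:
    """Stand-in for a vendor API client (hypothetical)."""

    name = "external-llm"

    def generate(self, prompt: str, max_tokens: int = 256) -> str:
        return f"[{self.name}] detailed answer to: {prompt[:40]}"


# Adding or swapping a model only touches this registry, not the router.
REGISTRY: dict[str, ModelBackend] = {
    backend.name: backend
    for backend in (LocalOpenSourceModel(), HostedProprietaryModel())
}


def answer(target: str, prompt: str) -> str:
    return REGISTRY[target].generate(prompt)
```

Because `Protocol` uses structural typing, a new backend does not need to inherit from anything; it only has to provide a `name` and a matching `generate` method.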

Cost Efficiency

Private LLM deployments are expensive to build and maintain. By combining heavy and lightweight models intelligently, Hybrid LLMs can significantly reduce infrastructure and API costs, because the expensive model is invoked only for the requests that actually need it.
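
To see where the savings come from, compare an all-LLM deployment with a hybrid split. The per-request prices and the 80/20 traffic split below are hypothetical placeholders, not vendor pricing; what matters is the structure of the calculation.

```python
def blended_cost(requests: int, slm_share: float,
                 slm_cost: float, llm_cost: float) -> float:
    """Total cost when slm_share of requests go to the SLM and the rest to the LLM."""
    if not 0.0 <= slm_share <= 1.0:
        raise ValueError("slm_share must be between 0 and 1")
    slm_requests = requests * slm_share
    llm_requests = requests - slm_requests
    return slm_requests * slm_cost + llm_requests * llm_cost


def savings_vs_llm_only(requests: int, slm_share: float,
                        slm_cost: float, llm_cost: float) -> float:
    """Fraction of spend saved compared with sending every request to the LLM."""
    baseline = requests * llm_cost
    hybrid = blended_cost(requests, slm_share, slm_cost, llm_cost)
    return 1 - hybrid / baseline


# Hypothetical prices: 0.001 per SLM request, 0.02 per LLM request.
print(round(savings_vs_llm_only(100_000, 0.8, 0.001, 0.02), 3))  # → 0.76
```

The savings reduce to `slm_share * (1 - slm_cost / llm_cost)`, so they grow with the share of traffic the SLM absorbs and with the price gap between the two models. The sketch ignores fixed costs such as hosting the SLM and running the router.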

Practical Applications of Hybrid LLMs

Hybrid LLMs can be applied across various sectors, enhancing customer support with intelligent chatbots, optimizing content generation, and automating complex decision-making processes. They also improve predictive analytics, supply chain management, and personalized marketing strategies, driving operational efficiency and growth.

Enterprise AI Systems

An industrial company can deploy a Hybrid LLM to analyze internal documents, generate automated reports, or assist employees, while keeping sensitive data inside its own environment and reducing the risk of leaks.

Customer Service

A chatbot powered by Hybrid LLM can instantly answer FAQs through an SLM, while routing complex inquiries to a more powerful LLM. The result: a seamless, secure, and efficient customer experience.
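
One possible way to implement "answer FAQs instantly, escalate the rest" is a cascade: the SLM answers first, and the request is escalated to the LLM only when the SLM's confidence falls below a threshold. This is a variant of the up-front routing described earlier rather than something the article prescribes; the confidence score, the 0.7 threshold, and the stub models are assumptions.

```python
from typing import Callable

# A model here is any function returning (answer, confidence in [0, 1]).
Model = Callable[[str], tuple[str, float]]


def cascade(question: str, slm: Model, llm: Model,
            threshold: float = 0.7) -> tuple[str, str]:
    """Try the SLM first; escalate to the LLM when its confidence is low.

    Returns (answer, name of the model that produced it).
    """
    answer, confidence = slm(question)
    if confidence >= threshold:
        return answer, "slm"
    answer, _ = llm(question)
    return answer, "llm"


# Hypothetical stubs standing in for real model calls.
FAQ = {"what are your opening hours?": "We are open 9:00-18:00, Monday to Friday."}


def stub_slm(q: str) -> tuple[str, float]:
    hit = FAQ.get(q.strip().lower())
    return (hit, 0.95) if hit else ("", 0.2)


def stub_llm(q: str) -> tuple[str, float]:
    return f"Detailed answer for: {q}", 0.9
```

The trade-off is that escalated requests pay for both calls, so the threshold would need tuning on real traffic.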

RAG (Retrieval-Augmented Generation)

Hybrid LLMs often integrate with RAG, which enriches models with reliable internal data. This ensures responses are accurate, contextualized, and compliant, a crucial advantage for regulated sectors.
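
The retrieval step in RAG can be sketched in a few lines: score internal documents against the question, keep the best matches, and prepend them to the prompt with an instruction to answer only from that context. Real systems typically use embedding models and a vector index; this self-contained sketch substitutes simple word-overlap scoring, and the document store and prompt wording are hypothetical.

```python
import re
from collections import Counter

# Hypothetical internal knowledge base.
DOCS = {
    "policy-12": "Refunds are processed within 14 days of the return being received.",
    "policy-07": "Premium customers can cancel their subscription at any time.",
    "hr-03": "Employees accrue 25 days of paid leave per year.",
}


def tokenize(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, k: int = 2) -> list[str]:
    """Return ids of up to k documents sharing the most words with the question."""
    q = tokenize(question)
    # Counter & Counter keeps the minimum count of each shared word.
    scores = {doc_id: sum((q & tokenize(text)).values()) for doc_id, text in DOCS.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)
    return [doc_id for doc_id in ranked[:k] if scores[doc_id] > 0]


def build_prompt(question: str, k: int = 2) -> str:
    context = "\n".join(f"[{d}] {DOCS[d]}" for d in retrieve(question, k))
    return (
        "Answer using only the context below and cite the document ids.\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )
```

Asking the model to cite document ids makes each answer traceable to a specific internal source, which helps with the auditability that regulated sectors need.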

Challenges of Hybrid LLM Adoption

The adoption of Hybrid LLMs can be challenging due to the complexity of integrating AI with existing systems, requiring specialized knowledge and resources. Additionally, enterprises may face difficulties in ensuring data quality, maintaining model transparency, and addressing ethical concerns related to AI decision-making.

As promising as this technology is, it also presents challenges:

- Deployment complexity: integrating a Hybrid LLM requires advanced orchestration and robust infrastructure.

- Data governance: defining what data stays local and what can be processed externally requires a clear framework.

- Regulatory balance: organizations must navigate innovation while remaining compliant with evolving legal frameworks.

For this reason, few enterprises can build such solutions internally. Partnering with trusted experts is essential to ensure both technical excellence and legal compliance.

Future Outlook of Hybrid LLMs

The future of Hybrid LLMs looks promising, with advancements in AI and machine learning driving more sophisticated, context-aware solutions. As they become increasingly accessible, enterprises will leverage these models to enhance automation, improve personalized experiences, and unlock new avenues for innovation and growth.

In the coming years, hybrid models are expected to become the new standard in enterprise AI.

- They will play a central role in driving operational excellence, automating tasks, and fostering continuous improvement.

- Their adoption will spread across regulated industries, enabling organizations to combine power with compliance.

- They will evolve alongside generative AI advances, especially in multimodal approaches (text, images, video, structured data).

Within five years, Hybrid LLMs are likely to be the default choice for large organizations across Europe.

Last Thoughts

Hybrid LLMs provide a concrete answer to the current enterprise dilemma: how to leverage the power of advanced AI while protecting sensitive data and controlling costs.

By combining performance, customization, security, and compliance, they act as a bridge between innovation and regulation.

The future of enterprise AI is hybrid.

Frequently Asked Questions (FAQ)

How can an enterprise get started with Hybrid LLMs?

Organizations should begin by identifying critical use cases where performance and compliance are equally important (e.g., internal reporting, chatbots, or document analysis). Hybrid LLMs can then be integrated progressively into existing infrastructure.

How does HybridLLM ensure data protection?

HybridLLM uses local anonymization and masking to protect sensitive information before it is processed. Data never leaves the enterprise’s secure environment unprotected, supporting data sovereignty and compliance with ISO 27001, the Swiss DPA, and other international regulations and standards.

What's the difference between AI and LLMs?

AI (Artificial Intelligence) encompasses a broad range of technologies designed to mimic human intelligence, including machine learning, computer vision, and robotics. LLMs (Large Language Models) are a specific type of AI focused on processing and generating human language, leveraging vast datasets to understand and produce text with remarkable accuracy.
